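Eureka provides REST-based services and is used for service management in a microservice architecture. Eureka also provides a Java client component that implements load balancing, which makes it easier to deploy business components as clusters and to decouple them. With this framework, business components register with the Eureka container and can be deployed as clusters, while Eureka maintains the list of these service components and checks their status automatically.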

Spring Boot version: 2.1.5.RELEASE

Spring Cloud version: Greenwich.SR1

Preface

About Microservices

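The term "microservices" was popularized by the article "Microservices" by James Lewis and Martin Fowler. Microservices is an architectural style that splits a monolithic application into small service units. It is not covered in detail here.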

About Spring Cloud and Netflix

About Netflix OSS

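Netflix is an online video provider. Some years ago, Netflix set up its own open source center, the Netflix Open Source Software Center, or Netflix OSS for short. The organization focuses on big data and cloud computing. It offers a number of open source frameworks, including big data tools, build tools, and service tools for cloud platforms. These frameworks closely follow the principles of microservices and implement mechanisms such as decentralized service management and service fault tolerance.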

Spring Cloud and Netflix

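Spring Cloud is not a single concrete framework. Think of it as a toolbox whose tools help us build microservice systems quickly. Each Spring Cloud project is built on Spring Boot: it wraps several Netflix frameworks and binds them into the Spring environment through auto-configuration, which makes these frameworks simpler to use. Because Spring Boot is so easy to use, integrating the Netflix frameworks into a project with Spring Cloud is straightforward. The Spring Cloud Netflix module mainly wraps the following Netflix projects: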

- Service discovery: Eureka

- Circuit breaking: Hystrix

- Service routing: Zuul

- Client-side load balancing: Ribbon

Besides the Netflix modules, Spring Cloud also provides other modules:

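- Spring Cloud Config: provides a configuration server and a configuration client for distributed systems. Together they make it easy to manage the configuration files of a cluster.

- Spring Cloud Sleuth: a service tracing framework that integrates with data analysis and tracing systems such as Zipkin, Apache HTrace, and ELK, making it easier to trace services and troubleshoot problems.

- Spring Cloud Stream: a framework for building message-driven microservices. It builds on Spring Boot and uses Spring Integration to connect to message broker middleware.

- Spring Cloud Bus: a cluster message bus that connects to message brokers such as RabbitMQ and Kafka.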

Service Registration and Discovery: Eureka

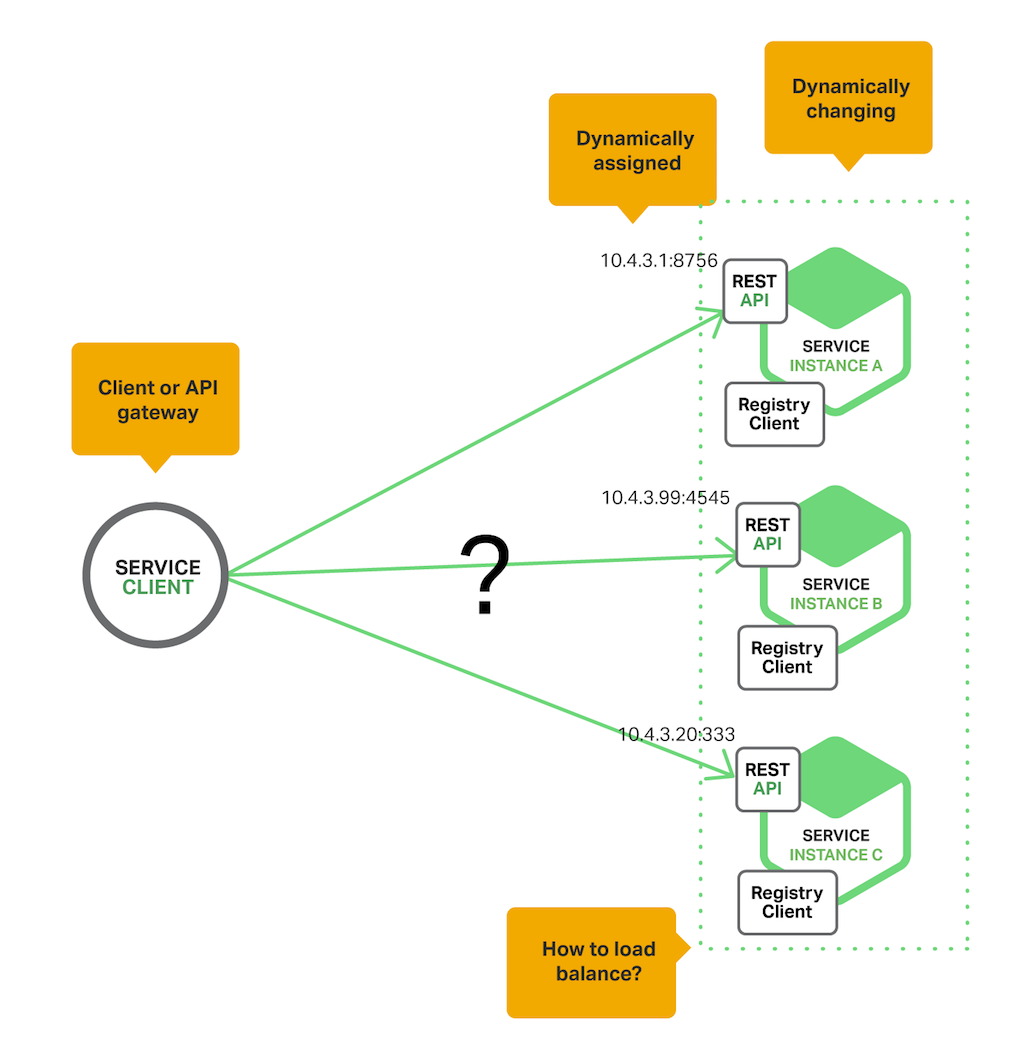

Why Use Service Discovery

https://www.nginx.com/blog/service-discovery-in-a-microservices-architecture/

Let’s imagine that you are writing some code that invokes a service that has a REST API or Thrift API. In order to make a request, your code needs to know the network location (IP address and port) of a service instance. In a traditional application running on physical hardware, the network locations of service instances are relatively static. For example, your code can read the network locations from a configuration file that is occasionally updated.

In a modern, cloud‑based microservices application, however, this is a much more difficult problem to solve as shown in the following diagram.

Service instances have dynamically assigned network locations. Moreover, the set of service instances changes dynamically because of autoscaling, failures, and upgrades. Consequently, your client code needs to use a more elaborate service discovery mechanism.

There are two main service discovery patterns: client‑side discovery and server‑side discovery.

Eureka Architecture


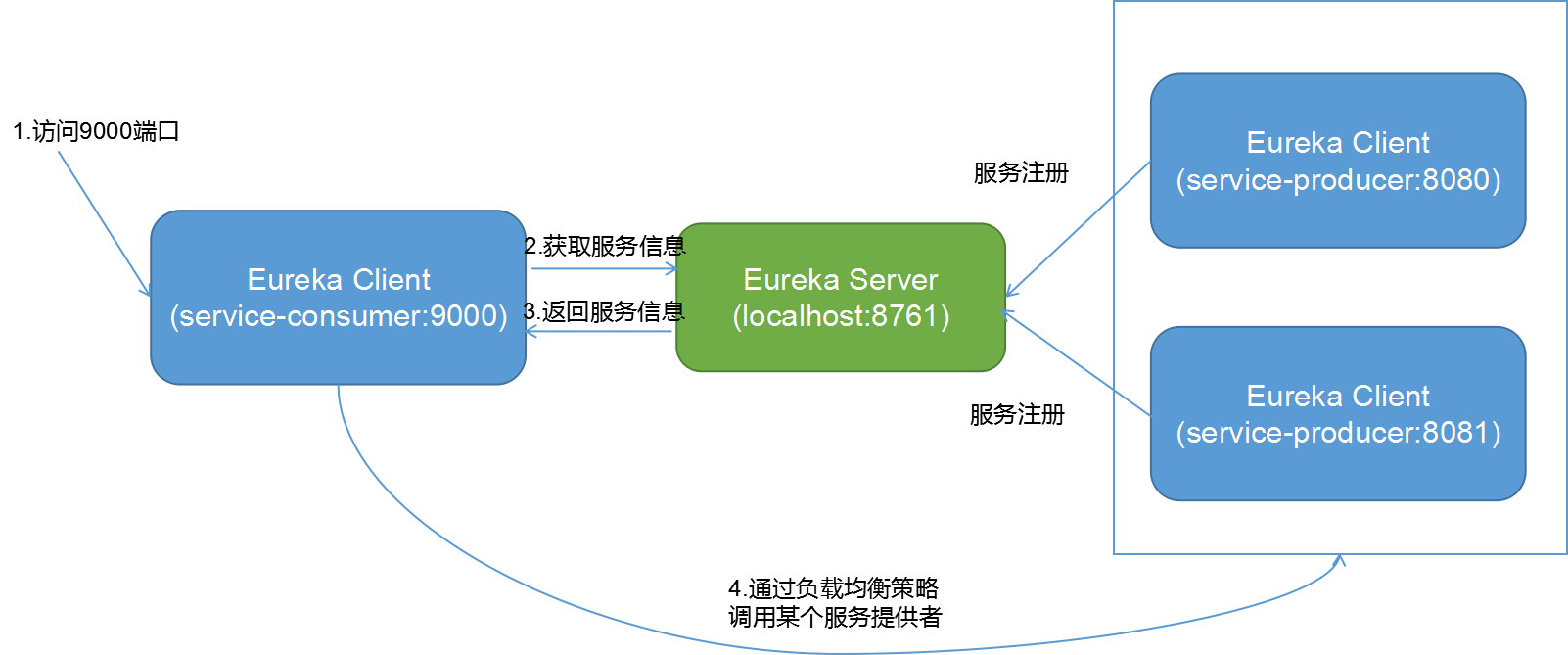

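A simple Eureka cluster needs one Eureka server and several service providers. Business components act as service providers and register themselves with the Eureka server. Other components act as Eureka clients: they fetch the component list from the Eureka server and make remote calls.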

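The figure shows three Eureka servers. Servers support cluster deployment, and each server can also act as a client of the other servers, so the servers register with and replicate to one another. The figure also shows four Eureka clients: two are service providers that publish services, and the other two are service callers (also called consumers). Both servers and clients can run as multiple instances, which makes it easy to build a highly available service cluster.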
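The roles above can be made concrete with a minimal plain-Java sketch of what a registry does: providers register an address under an application name, and consumers look that name up. The class and method names are hypothetical, and this is not Eureka's API. The sketch leaves out heartbeats, replication, and instance status.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical, heavily simplified registry: application name -> (instanceId -> "host:port").
// It only illustrates the register/lookup roles; it is not Eureka's API.
class SimpleRegistry {
    private final Map<String, Map<String, String>> apps = new LinkedHashMap<>();

    // The Eureka console lists application names in upper case (e.g. SERVICE-PROVIDER),
    // so this sketch normalizes names the same way.
    private static String key(String appName) {
        return appName.toUpperCase(Locale.ROOT);
    }

    // A provider registers itself; registering the same instanceId again overwrites it.
    void register(String appName, String instanceId, String host, int port) {
        apps.computeIfAbsent(key(appName), k -> new LinkedHashMap<>())
            .put(instanceId, host + ":" + port);
    }

    // A consumer looks up all addresses of an application by its logical name.
    List<String> getInstances(String appName) {
        Map<String, String> instances = apps.get(key(appName));
        if (instances == null) {
            return Collections.emptyList();
        }
        return new ArrayList<>(instances.values());
    }
}
```

For example, two providers registered as service-provider on ports 8080 and 8081 are both returned when a consumer asks for SERVICE-PROVIDER.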

Building the Eureka Server

We recommend creating the project with Spring Initializr.

The key part of the generated pom.xml is as follows:


The spring-cloud-starter-netflix-eureka-server dependency automatically pulls in spring-boot-starter-web. Adding this one dependency is therefore enough to give the project a web container.

Add @EnableEurekaServer to the main application class to start a Eureka server.


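No server port has been configured so far, so the server would use the default port 8080. We change the port to 8761 by creating an application.yml configuration file in the src/main/resources directory: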


Start the Spring Boot application, open a browser, and go to http://localhost:8761 to see the Eureka server console, as shown below:

Writing the Service Provider

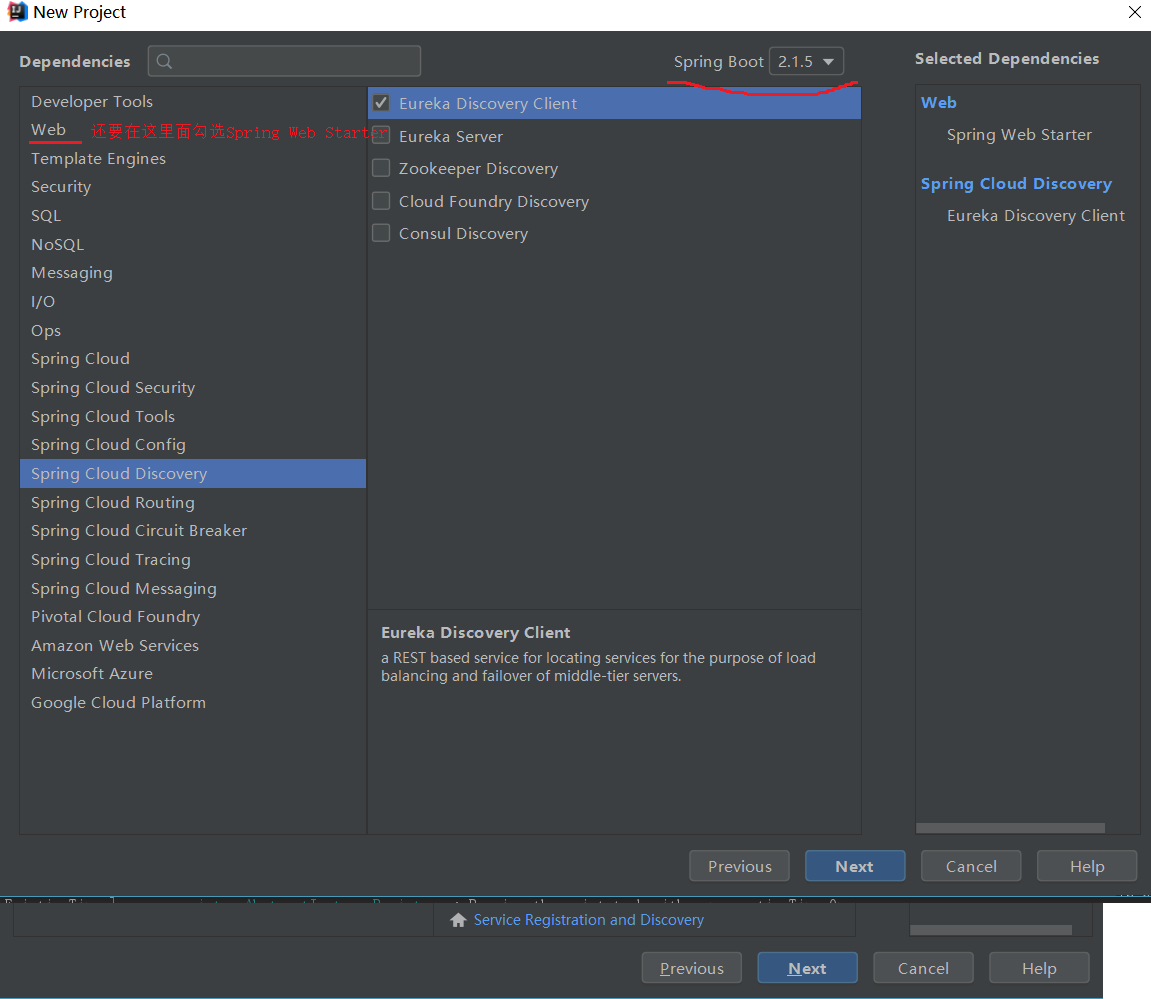

Again, create the project with Spring Initializr.

pom.xml


application.yml


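The configuration above sets the application name to service-provider. The service registers with the Eureka server on port 8761, which is the server built earlier in this section. It also uses eureka.instance.hostname to set the host name of this service instance.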

GreetingController.java

Write a Controller that exposes a minimal REST endpoint to simulate the service being provided:


Add @EnableEurekaClient to the main application class to start a Eureka client.


Run the Spring Boot application twice, setting server.port in the yml to 8080 for one run and 8081 for the other. Then check the Eureka console again:

Note that the Instances list now shows two instances with status UP for the SERVICE-PROVIDER service.

For the "EMERGENCY! EUREKA MAY BE INCORRECTLY ..." warning, see Self-Preservation Mode below.

Writing the Service Consumer

Next, we write a service consumer. It obtains the service address from Eureka and then calls the service.

Create the project with Spring Initializr, selecting Spring Web Starter and Eureka Discovery Client just as for the service provider. The resulting pom file is the same.

Create a Service layer that calls the service provided by another module (here, the service-provider we just wrote).


Note that the call uses only the service name. The provider's actual host name or IP address is not needed.

To check the result returned by the service provider, we create a Controller.


Note that the restTemplate used in MyService has to be configured manually. We configure it in the main class.


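RestTemplate is a class from the spring-web module and is mainly used to call REST services. On its own it cannot call distributed services. Once the RestTemplate bean is annotated with @LoadBalanced, however, that RestTemplate instance can access distributed services by service name.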

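The main class also uses the @EnableDiscoveryClient annotation, which lets the service consumer discover services in Eureka. Note that the @EnableEurekaClient annotation used in the service provider already includes the functionality of @EnableDiscoveryClient. In other words, a Eureka client is itself able to discover services.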
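The idea of calling by service name can be sketched in plain Java. The host part of a URL such as http://service-provider/hello is treated as a logical service name and replaced with the address of a concrete instance before the request is sent. This is a hypothetical illustration: the class name and the map-based registry are assumptions. In Spring Cloud the actual rewriting is done by the interceptor that @LoadBalanced attaches to the RestTemplate, together with Ribbon, which also chooses the instance.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of resolving a logical service name in a URL.
// Not Spring Cloud code: there, the @LoadBalanced interceptor and Ribbon do this work.
class ServiceNameResolver {
    private final Map<String, List<String>> registry; // service name -> "host:port" entries

    ServiceNameResolver(Map<String, List<String>> registry) {
        this.registry = registry;
    }

    // Replaces the service name in logicalUrl with the instance at position `choice`.
    URI resolve(String logicalUrl, int choice) {
        try {
            URI original = new URI(logicalUrl);
            List<String> instances = registry.get(original.getHost());
            if (instances == null || instances.isEmpty()) {
                throw new IllegalStateException("No instances for " + original.getHost());
            }
            String[] hostPort = instances.get(Math.floorMod(choice, instances.size())).split(":");
            // Keep scheme, path, query and fragment; swap in the concrete host and port.
            return new URI(original.getScheme(), original.getUserInfo(), hostPort[0],
                    Integer.parseInt(hostPort[1]), original.getPath(),
                    original.getQuery(), original.getFragment());
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Bad URL: " + logicalUrl, e);
        }
    }
}
```

Service names used this way must be valid host names (letters, digits, and hyphens, as in service-provider). Otherwise java.net.URI cannot parse them as a host.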

application.yml


Run the Spring Boot application and visit localhost:9000 several times. The responses alternate between "hello from provider 8080" and "hello from provider 8081".

Under the hood, the Ribbon component performs client-side load balancing. See the Ribbon documentation for details.
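The alternating responses are what a round-robin choice over the two registered instances produces. The sketch below shows only that core idea, and its class name is hypothetical. Ribbon's actual default rule in Spring Cloud Netflix is ZoneAvoidanceRule, which first filters servers by zone and availability and then picks among the remaining servers in round-robin order.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Minimal round-robin chooser (hypothetical name), illustrating why consecutive
// calls alternated between the 8080 and 8081 instances.
class RoundRobinChooser {
    private final AtomicInteger counter = new AtomicInteger();

    String choose(List<String> instances) {
        if (instances.isEmpty()) {
            throw new IllegalStateException("No instances available");
        }
        // floorMod keeps the index non-negative even after the counter overflows.
        int index = Math.floorMod(counter.getAndIncrement(), instances.size());
        return instances.get(index);
    }
}
```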

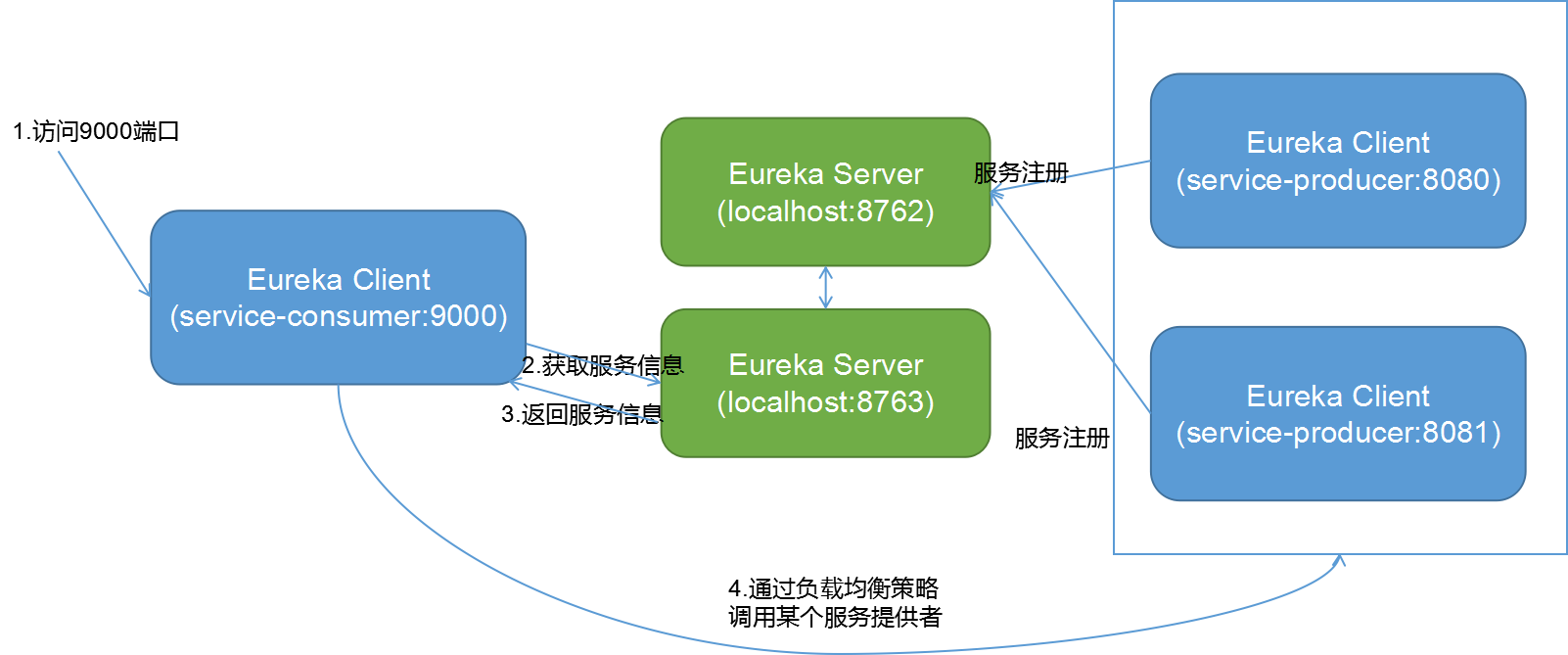

To summarize, the call structure among the projects built so far is as follows:

Building a Eureka Server Cluster

Now we modify the earlier projects to build a Eureka cluster for high availability.

Modifying the Eureka Server Configuration

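Modify application.yml in the Eureka server project to use the profiles feature of the configuration file. spring.profiles.active selects the active profile, and the single profile corresponds to a single Eureka server.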

Add mappings for eureka1 and eureka2 to the hosts file.

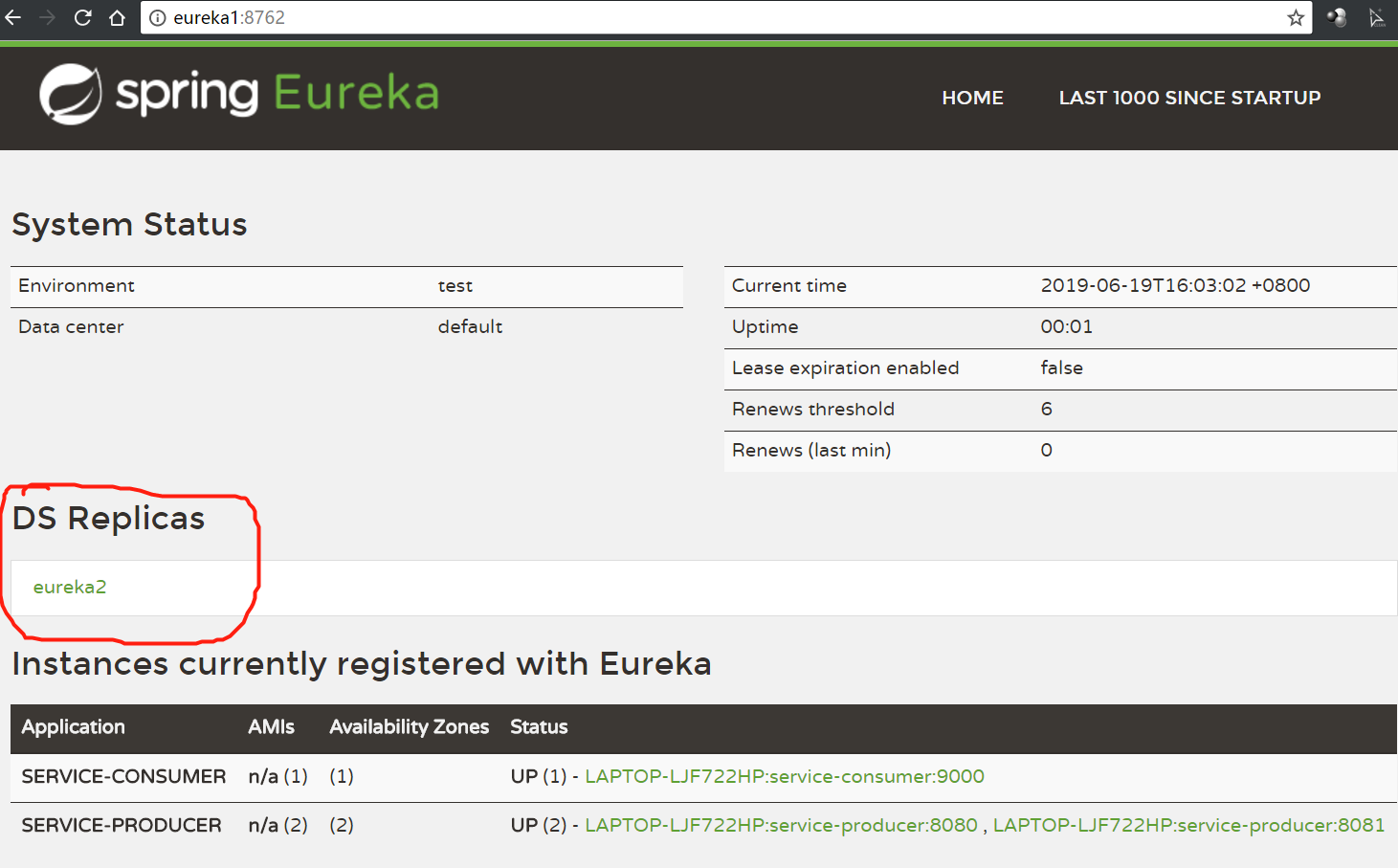

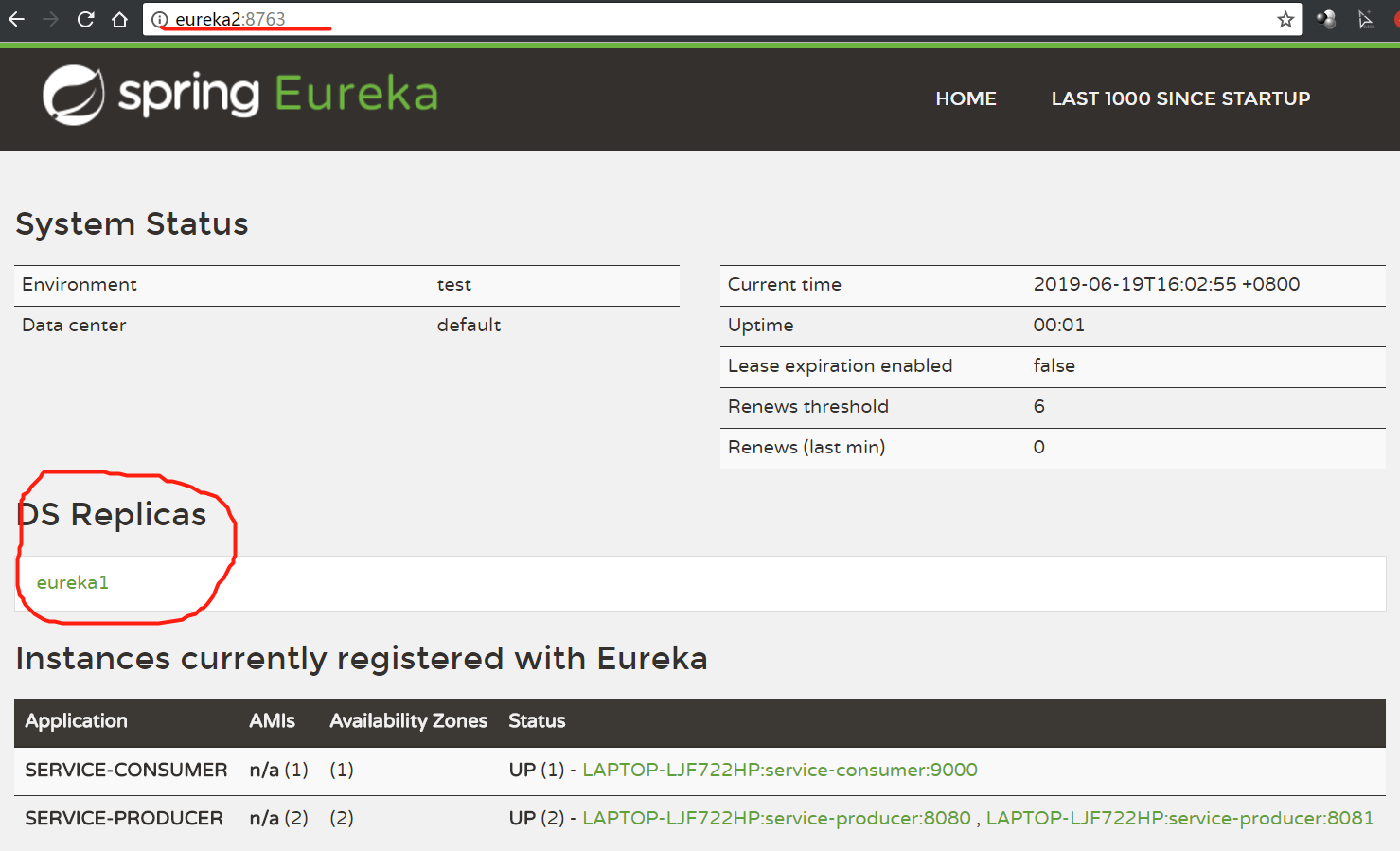

Run the program twice, with spring.profiles.active set to eureka1 and eureka2 respectively. The two Eureka server instances then run at http://eureka1:8762 and http://eureka2:8763.

Modifying the Service Provider Configuration

Modify application.yml in the same way:


Set spring.profiles.active to cluster-eureka, and start two instances with server.port set to 8080 and 8081 respectively.

Modifying the Service Consumer Configuration


Set spring.profiles.active to cluster-eureka and run the consumer.

Check each of the two Eureka server instances:

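The current call relationships are shown in the figure below. The EurekaServer instances register with each other and synchronize information, while each EurekaClient only needs to register with one EurekaServer: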

Service Health Checks

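By default, a Eureka client sends a heartbeat to the server every 30 seconds to report that it is still alive. In practice, though, a client can keep sending heartbeats normally while the service itself is unusable. For example, a service provider that depends on a database may still respond normally even though the database can no longer be reached. Similarly, a provider may depend on third-party services that have already failed. In these cases the client should report its real status to the server, so that callers and other clients no longer receive the faulty instances. Eureka's health check handler can be used for this.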

Add the following to the service provider's pom.xml:

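```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-actuator</artifactId>
</dependency>
```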

For simplicity, a Eureka server cluster is not used here.

In the service provider's application.yml, set spring.profiles.active to cluster-eureka and server.port to 8080.

In the eureka-service project's application.yml, set spring.profiles.active to single.

Start eureka-service first, then start producer.

Open the Eureka console at localhost:8761.

Then visit http://localhost:8080/actuator/health.

Custom Application Health Checks

Suppose the service should be considered available only if it can connect to the database. Two things are needed:

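- The client checks for itself whether it can connect to the database.

- The client ties the result of that check to its own status and reports the status to the Eureka server.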

Implement a custom HealthIndicator that determines the application's health according to whether the database is reachable.


For simplicity, the canVisitDb variable of the GreetingController class simulates whether the database is reachable, and a REST endpoint lets us change its value at runtime.

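The decision the custom indicator makes can be sketched without Spring. In the actual project the class would implement Spring Boot's HealthIndicator and return Health.up().build() or Health.down().build(). In this sketch, a hypothetical DbHealthCheck takes the canVisitDb flag as a BooleanSupplier and maps it to an UP or DOWN status. The HealthStatus enum is also a stand-in, not a Spring type.

```java
import java.util.function.BooleanSupplier;

// Stand-in for Spring Boot's health status (hypothetical, for a self-contained sketch).
enum HealthStatus { UP, DOWN }

// Hypothetical equivalent of the custom HealthIndicator: healthy only if the DB is reachable.
class DbHealthCheck {
    private final BooleanSupplier canVisitDb; // e.g. backed by GreetingController.canVisitDb

    DbHealthCheck(BooleanSupplier canVisitDb) {
        this.canVisitDb = canVisitDb;
    }

    HealthStatus health() {
        return canVisitDb.getAsBoolean() ? HealthStatus.UP : HealthStatus.DOWN;
    }
}
```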

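Next, for the service provider to report its health status to the server, it must also implement a HealthCheckHandler. The handler keeps the application's health status in memory. Whenever the status changes, the client re-registers with the server, and other clients no longer receive the unavailable instances.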


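Eureka starts a timer that periodically refreshes the local instance information. On each run it calls the handler's getStatus method and then updates the instance's status on the server. This timer runs every 30 seconds by default. To see the effect sooner, change the eureka.client.instanceInfoReplicationIntervalSeconds setting.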
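The refresh-and-compare behavior of that timer can be modeled in a few lines. In the real client the handler implements com.netflix.appinfo.HealthCheckHandler, whose getStatus(InstanceInfo.InstanceStatus currentStatus) method returns the status. To run without Eureka, this sketch replaces the handler with a plain Supplier<String> and replaces the timer with a manual tick() call. The names are hypothetical. The sketch shows only that the server is updated when the status changes, not how Eureka implements it.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Supplier;

// Simplified, hypothetical model of the periodic status replication described above.
class StatusReplicator {
    private final Supplier<String> statusHandler; // plays the role of getStatus(...)
    private final List<String> pushedToServer = new ArrayList<>();
    private String lastStatus;

    StatusReplicator(Supplier<String> statusHandler) {
        this.statusHandler = statusHandler;
    }

    // Called once per replication interval (30 s by default according to the text).
    void tick() {
        String current = statusHandler.get();
        if (!current.equals(lastStatus)) {
            pushedToServer.add(current); // "re-register" with the new status
            lastStatus = current;
        }
    }

    // Statuses that were sent to the server, in order.
    List<String> history() {
        return Collections.unmodifiableList(pushedToServer);
    }
}
```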

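Start the Eureka server. Then start the service provider with the custom health check (port 8081), the earlier provider without it (port 8080), and a service consumer (port 9000).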

Visit localhost:9000 several times. The consumer calls the two service providers in turn:

Visit localhost:8081/canVisitDb/false to simulate the database becoming unavailable.

Then open http://localhost:8761 in the browser. The service provider's status is now DOWN.

After a few seconds, visit localhost:9000 several times. Only "hello from provider 8080" appears, which means the consumer no longer calls the provider instance whose status is DOWN.

Common Configuration

Heartbeats

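Each client instance sends periodic heartbeats to the server, every 30 seconds by default. The client property eureka.instance.leaseRenewalIntervalInSeconds changes this interval. If the server receives no heartbeat from an instance within a certain period, it removes the instance from the registry, and other clients can no longer reach it. This period defaults to 90 seconds and can be changed with eureka.instance.leaseExpirationDurationInSeconds. In other words, if the server receives no heartbeat from a client for 90 seconds, it removes that instance from the list.

Note, however, that the registry is cleaned by a timer that runs every 60 seconds by default. If leaseExpirationDurationInSeconds is set below 60 seconds, an instance may already qualify for removal but still remain in the registry until the next cleanup run. The cleanup interval can be changed with the eureka.server.eviction-interval-timer-in-ms property on the Eureka server. Also note that while self-preservation mode is in effect, instances are not evicted at all. To avoid this during testing, first turn off self-preservation by configuring the Eureka server with:

eureka.server.enable-self-preservation=false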
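The interaction between the lease duration and the cleanup timer can be worked through with a small calculation. The Java sketch below uses a simplified timing model that is an assumption of this example. The eviction task first runs one interval after startup and then once per interval. It removes an instance on the first run after the instance's lease has expired. The model ignores self-preservation and other Eureka internals, and the class name is hypothetical.

```java
// Simplified timing model (an assumption, not Eureka's code): eviction runs at
// evictionIntervalSec, 2 * evictionIntervalSec, ... and removes an instance on the
// first run strictly after lastRenewal + leaseExpiration.
class EvictionTiming {
    static long removalTime(long lastRenewalSec, long leaseExpirationSec, long evictionIntervalSec) {
        long expiresAt = lastRenewalSec + leaseExpirationSec;
        // First multiple of the interval that is strictly greater than expiresAt.
        return (expiresAt / evictionIntervalSec + 1) * evictionIntervalSec;
    }
}
```

In this model, with the defaults an instance whose last heartbeat was at t = 0 disappears at t = 120 s. With a 30-second lease it still lingers until the run at t = 60 s, which is the effect described above.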

Registry Fetch Interval

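By default, a client fetches the registry (the list of available services) from the server every 30 seconds and stores it in a local cache. The eureka.client.registryFetchIntervalSeconds setting changes the fetch interval. Choosing a value means trading performance against freshness.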
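Because the client works from a cached copy, the freshness side of that trade-off can be estimated roughly. The sketch below adds up how long a consumer might keep seeing an instance that died right after its last heartbeat: lease expiry, then up to one eviction interval, then up to one fetch interval. This is a simplified assumption. It ignores the server's response cache and Ribbon's own server-list refresh, both of which can add further delay. The class name is hypothetical.

```java
// Rough, simplified upper bound (an assumption of this sketch) on how long a consumer
// can keep seeing an instance that died immediately after its last heartbeat.
class StalenessBound {
    static long worstCaseSeconds(long leaseExpirationSec, long evictionIntervalSec, long registryFetchSec) {
        // Lease expiry, then up to one full eviction interval, then up to one full fetch interval.
        return leaseExpirationSec + evictionIntervalSec + registryFetchSec;
    }
}
```

With the defaults discussed in this section (90 s, 60 s, 30 s), this bound is 180 seconds.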

Configuring and Using Metadata

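The framework provides built-in metadata such as the instance id, host name, and IP address. To define custom metadata for other clients to use, configure the eureka.instance.metadata-map property. All metadata is stored in the server's registry and shared with clients in a simple way. Normally, custom metadata does not change client behavior unless the client knows what the metadata means. The following configuration fragment uses metadata: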

```yaml
eureka:
  instance:
    metadata-map:
      company-name: abc
```

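This configures a metadata entry named company-name with the value abc. The side that uses the metadata can read it through DiscoveryClient methods, as shown in the following code: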

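On the consumer side, reading company-name is a map lookup. In Spring Cloud, DiscoveryClient.getInstances(serviceId) returns ServiceInstance objects, and their getMetadata() method returns the metadata map. To keep the example self-contained, the sketch below replaces those types with a hypothetical SimpleInstance class. It shows one possible use: selecting only the instances whose metadata matches a given key and value.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for ServiceInstance: an address plus its metadata map.
class SimpleInstance {
    final String address;
    final Map<String, String> metadata;

    SimpleInstance(String address, Map<String, String> metadata) {
        this.address = address;
        this.metadata = Collections.unmodifiableMap(metadata);
    }
}

class MetadataFilter {
    // Returns the addresses of instances whose metadata contains key=value.
    static List<String> withMetadata(List<SimpleInstance> instances, String key, String value) {
        List<String> result = new ArrayList<>();
        for (SimpleInstance instance : instances) {
            if (value.equals(instance.metadata.get(key))) {
                result.add(instance.address);
            }
        }
        return result;
    }
}
```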

Self-Preservation Mode

During development, the Eureka home page often shows the following warning in red:

EMERGENCY! EUREKA MAY BE INCORRECTLY CLAIMING INSTANCES ARE UP WHEN THEY’RE NOT. RENEWALS ARE LESSER THAN THRESHOLD AND HENCE THE INSTANCES ARE NOT BEING EXPIRED JUST TO BE SAFE.

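This message means that Eureka has entered self-preservation mode. As described earlier, clients send heartbeats to the server periodically. If the renewals received fall below a threshold (by default 85% of the expected number), the server protects these instances instead of removing them from the registry right away. Other clients may then receive instances that cannot be used, which can let failures spread. Fault-tolerance mechanisms address this, and cluster fault tolerance is covered in later chapters. During development, set the server's eureka.server.enable-self-preservation property to false to turn off self-preservation. After that, the Eureka home page shows the following message: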

THE SELF PRESERVATION MODE IS TURNED OFF. THIS MAY NOT PROTECT INSTANCE EXPIRY IN CASE OF NETWORK/OTHER PROBLEMS.

This means self-preservation mode is off: expired instances will no longer be protected when network or other problems occur.
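The threshold logic can be written out as arithmetic. The following sketch is modeled on how Eureka 1.x computes its renewal threshold as we understand it; this is an assumption, not Eureka's actual code. Each client is expected to renew every 30 seconds, or twice a minute. Instance expiry is allowed only while the number of renewals received in the last minute stays above 85% of that expectation. The class and method names are hypothetical.

```java
// Hypothetical sketch of the self-preservation arithmetic (modeled on Eureka 1.x, not copied).
class SelfPreservation {
    // Expected renewals per minute across all clients, scaled by the percent threshold.
    static int renewsPerMinThreshold(int expectedClients, int renewalIntervalSec, double percentThreshold) {
        return (int) (expectedClients * (60.0 / renewalIntervalSec) * percentThreshold);
    }

    // Whether the server may currently expire (evict) instances.
    static boolean expirationEnabled(boolean selfPreservationEnabled, int threshold, int renewsLastMin) {
        if (!selfPreservationEnabled) {
            return true; // eureka.server.enable-self-preservation=false
        }
        return threshold > 0 && renewsLastMin > threshold;
    }
}
```

With two registered instances, the expected rate is 4 renewals per minute and the threshold is (int)(4 × 0.85) = 3. Once renewals in the last minute drop to 3 or fewer (for example, because one of the two instances stops renewing), the server stops expiring instances. This is why the warning appears so often on a small development setup.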

How It Works

The following is excerpted from https://github.com/Netflix/eureka/wiki/Eureka-at-a-glance

Resilience

Eureka clients are built to handle the failure of one or more Eureka servers. Since Eureka clients have the registry cache information in them, they can operate reasonably well, even when all of the eureka servers go down.

Eureka Servers are resilient to other eureka peers going down. Even during a network partition between the clients and servers, the servers have built-in resiliency to prevent a large scale outage.

How different is Eureka from AWS ELB?

AWS Elastic Load Balancer is a load balancing solution for edge services exposed to end-user web traffic. Eureka fills the need for mid-tier load balancing. While you can theoretically put your mid-tier services behind the AWS ELB, in EC2 classic you expose them to the outside world and thereby lose all the usefulness of the AWS security groups.

AWS ELB is also a traditional proxy-based load balancing solution whereas with Eureka it is different in that the load balancing happens at the instance/server/host level. The client instances know all the information about which servers they need to talk to. This is a blessing or a curse depending on which way you look at it. If you are looking for a sticky user session based load balancing which AWS now offers, Eureka does not offer a solution out of the box. At Netflix, we prefer our services to be stateless (non-sticky). This facilitates a much better scalability model and Eureka is well suited to address this.

Another important aspect that differentiates proxy-based load balancing from load balancing using Eureka is that your application can be resilient to the outages of the load balancers, since the information regarding the available servers is cached on the client. This does require a small amount of memory, but buys better resiliency.

Understanding-eureka-client-server-communication

The following is excerpted from https://github.com/Netflix/eureka/wiki/Understanding-eureka-client-server-communication

The Eureka client interacts with the server the following ways.

Register

Eureka client registers the information about the running instance to the Eureka server.

Registration happens on first heartbeat (after 30 seconds).

Renew

Eureka client needs to renew the lease by sending heartbeats every 30 seconds. The renewal informs the Eureka server that the instance is still alive. If the server hasn’t seen a renewal for 90 seconds, it removes the instance out of its registry. It is advisable not to change the renewal interval since the server uses that information to determine if there is a wide spread problem with the client to server communication.

Fetch Registry

Eureka clients fetch the registry information from the server and cache it locally. After that, the clients use that information to find other services. This information is updated periodically (every 30 seconds) by getting the delta updates between the last fetch cycle and the current one. The delta information is held longer (for about 3 mins) in the server, hence the delta fetches may return the same instances again. The Eureka client automatically handles the duplicate information.

After getting the deltas, Eureka client reconciles the information with the server by comparing the instance counts returned by the server and if the information does not match for some reason, the whole registry information is fetched again. Eureka server caches the compressed payload of the deltas, whole registry and also per application as well as the uncompressed information of the same. The payload also supports both JSON/XML formats. Eureka client gets the information in compressed JSON format using jersey apache client.

Cancel

Eureka client sends a cancel request to Eureka server on shutdown. This removes the instance from the server’s instance registry thereby effectively taking the instance out of traffic.
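The reconciliation step described above can be sketched as follows: after applying a delta, the client checks whether its local view still matches the server's. The summary format used here (status and count pairs such as UP_2_, sorted by status) is modeled on the Eureka client's reconcile hash code as we understand it. Treat the format as an assumption, and the class and method names as hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of delta reconciliation: summarize instance counts per status and
// fall back to a full registry fetch if the local summary differs from the server's.
class Reconcile {
    static String hashOf(List<String> instanceStatuses) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted by status name
        for (String status : instanceStatuses) {
            counts.merge(status, 1, Integer::sum);
        }
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            sb.append(e.getKey()).append('_').append(e.getValue()).append('_');
        }
        return sb.toString();
    }

    // True when the locally reconstructed registry disagrees with the server's summary.
    static boolean needsFullFetch(List<String> localStatusesAfterDelta, String serverHash) {
        return !hashOf(localStatusesAfterDelta).equals(serverHash);
    }
}
```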

Understanding-Eureka-Peer-to-Peer-Communication

The following is excerpted from https://github.com/Netflix/eureka/wiki/Understanding-Eureka-Peer-to-Peer-Communication

Eureka clients try to talk to Eureka Server in the same zone. If there are problems talking with the server or if the server does not exist in the same zone, the clients fail over to the servers in the other zones.

Once the server starts receiving traffic, all of the operations that are performed on the server are replicated to all of the peer nodes that the server knows about. If an operation fails for some reason, the information is reconciled on the next heartbeat that also gets replicated between servers.

When the Eureka server comes up, it tries to get all of the instance registry information from a neighboring node. If there is a problem getting the information from a node, the server tries all of the peers before it gives up. If the server is able to successfully get all of the instances, it sets the renewal threshold that it should be receiving based on that information. If at any time the renewals fall below the percent configured for that value (below 85% within 15 mins), the server stops expiring instances to protect the current instance registry information.

In Netflix, the above safeguard is called self-preservation mode and is primarily used as a protection in scenarios where there is a network partition between a group of clients and the Eureka Server. In these scenarios, the server tries to protect the information it already has. There may be scenarios in case of a mass outage that this may cause the clients to get the instances that do not exist anymore. The clients must make sure they are resilient to eureka server returning an instance that is non-existent or un-responsive. The best protection in these scenarios is to timeout quickly and try other servers.

In the case where the server is not able to get the registry information from the neighboring node, it waits for a few minutes (5 mins) so that the clients can register their information. The server tries hard not to provide partial information to the clients, thereby skewing traffic only to a group of instances and causing capacity issues.

Eureka servers communicate with one another using the same mechanism used between the Eureka client and the server as described here.

What happens during network outages between Peers?

In the case of network outages between peers, following things may happen

- The heartbeat replications between peers may fail and the server detects this situation and enters into a self-preservation mode protecting the current state.

- Registrations may happen in an orphaned server and some clients may reflect new registrations while the others may not.

- The situation autocorrects itself after the network connectivity is restored to a stable state. When the peers are able to communicate fine, the registration information is automatically transferred to the servers that do not have them.

The bottom line is, during the network outages, the server tries to be as resilient as possible, but there is a possibility of clients having different views of the servers during that time.

Zookeeper vs. Eureka: Pros and Cons

https://blog.csdn.net/zipo/article/details/60588647

https://my.oschina.net/u/3677987/blog/2885801

References

《疯狂Spring Cloud微服务架构实战》

Understanding-eureka-client-server-communication

client‑side discovery and server‑side discovery

self‑registration pattern and third‑party registration pattern
